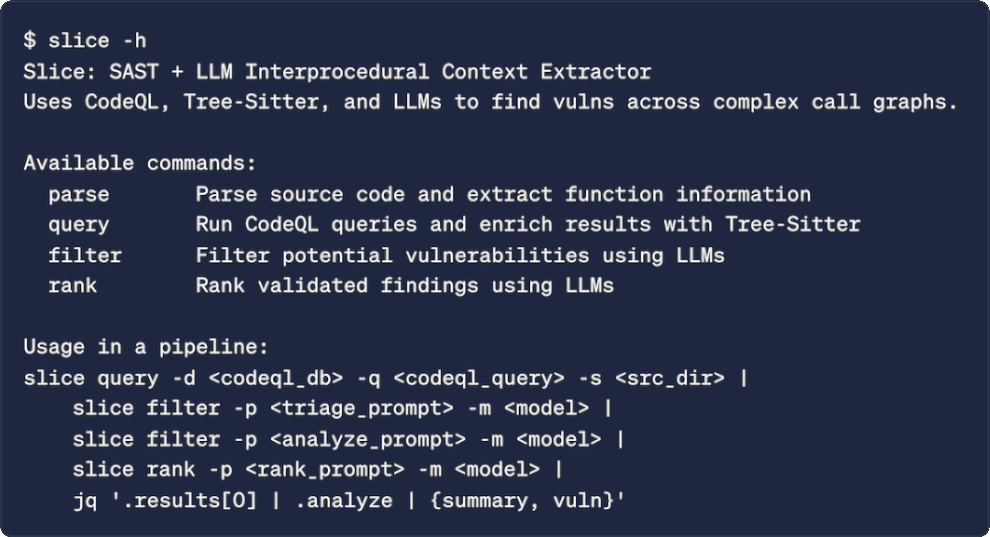

See source code for Slice CLI tool at https://github.com/noperator/slice

Earlier this summer, Sean Heelan published a great blog post detailing his use of o3 to find a use-after-free vulnerability in the Linux kernel. The internet lit up in response, and for good reason. Since the initial release of ChatGPT in late 2022, we’ve all been wondering: Can LLMs really find complex vulnerabilities in widely used production codebases? The Linux kernel is a great research target to help answer that question.

If you haven’t read Sean’s post, do. For my own part, a few areas for future work stuck out to me:

- How to decide which potentially vulnerable code to send to an LLM?

- It is no good arbitrary selecting functions to give to the LLM to look at if we can’t clearly describe how an automated system would select those functions. The ideal use of an LLM is we give it all the code from a repository, it ingests it and spits out results. However, due to context window limitations and regressions in performance that occur as the amount of context increases, this isn’t practically possible right now.

- How to maintain a high signal-to-noise ratio and prevent false positives while expanding the scope of our search?

- o3 finds the kerberos authentication vulnerability in the benchmark in 8 of the 100 runs. In another 66 of the runs o3 concludes there is no bug present in the code (false negatives), and the remaining 28 reports are false positives. For comparison, Claude Sonnet 3.7 finds it 3 out of 100 runs and Claude Sonnet 3.5 does not find it in 100 runs. So on this benchmark at least we have a 2x-3x improvement in o3 over Claude Sonnet 3.7.

- o3 finds the kerberos authentication vulnerability in 1 out of 100 runs with this larger number of input tokens, so a clear drop in performance, but it does still find it.

- How to realistically incorporate today’s models into a researcher’s workflow?

- If we were to never progress beyond what o3 can do right now, it would still make sense for everyone working in VR to figure out what parts of their work-flow will benefit from it, and to build the tooling to wire it in.

TL;DR: I built Slice (SAST + LLM Interprocedural Context Extractor) to address these questions. We’ll show how Slice works by walking through a reproduction of discovering CVE-2025-37778.

Reproducing CVE-2025-37778

I wanted to use AI-accelerated tooling to find the aforementioned use-after-free:

- as consistently as possible across multiple runs

- without any prior knowledge of the codebase

- without needing to build/compile anything (just read the code!)

While following similar constraints as the original experiment:

- explicitly looking for use-after-free

- analyzing call graph up to a depth of 3

- no tool use (in the MCP sense) or agentic frameworks

Let’s walk through the questions outlined above.

Which code to analyze?

To start: if we know we’re looking for use-after-free, then let’s just look for objects being freed and then used later on. Easy, right? Locating free-like calls is straightforward with a simple .*free.* regex, but tracking a freed object (especially across function calls) can get tricky. I tested a number of static analysis tools for taint tracking capabilities, but most required compiling the code to some degree. I don’t like this requirement since it limits our ability to scale analysis across individual files and snippets of code (or even slightly broken code) from large repos, so my options were limited.

In a stroke of good fortune—while I was in the middle of working on this project, GitHub announced that CodeQL can now be enabled at scale on C/C++ repositories using build-free scanning! This is super useful, but comes with some restrictions; e.g., since we aren’t actually building the project, we cannot1 (to my knowledge, anyway) specify preprocessor macros to trigger conditional inclusion of code blocks guarded by #ifdef directives. I got around this by simply inverting those directives to #ifndef in order to exercise more of the codebase:

$ find fs/smb/server/ -name "*.c" -o -name "*.h" |

xargs sed -i 's/^#ifdef/#ifndef/g'

With that out of the way, we can build the CodeQL database and run queries against it.

I pointed CodeQL at /fs/smb/server/ in Linux v6.15-rc3 (that’s 58 files, 26.4K lines of code, ~204K tokens) and tested built-in queries like

UseAfterFree.ql

and

UseAfterExpiredLifetime.ql

with no luck; this wasn’t terribly surprising since many default rules for static analyzers are optimized for low false positives so they don’t generate too much noise in an automated CI/CD pipeline. Being new to CodeQL, I fumbled my way through writing a query that was wide enough to find a valid cross-function UAF. As expected, this generated a lot of false positives despite my attempts to keep the noise down.

$ codeql database create smb-server \

--language=cpp \

--source-root=fs/smb/server/ \

--build-mode=none # important!

# so our query can use cpp stuff

$ cat > qlpack.yml << EOF

name: uaf

dependencies:

codeql/cpp-all: "*"

EOF

$ codeql pack install

$ codeql query run uaf.ql \

--database=smb-server \

--output uaf.bqrs

# here's the bug we want to find

$ codeql bqrs decode --format=csv uaf.bqrs |

xsv search -s free_func krb5_authenticate |

xsv search -s use_func smb2_sess_setup |

xsv flatten

usePoint user

object user

free_func krb5_authenticate

free_file smb2pdu.c

free_func_def_ln 1580

free_ln 1606

use_func smb2_sess_setup

use_file smb2pdu.c

use_func_def_ln 1668

use_ln 1910

# yikes...good thing this post is about using AI for triage!

$ codeql bqrs decode --format=csv uaf.bqrs | xsv count

1722

Okay, we’ve got over 1700 potential UAF according to our very permissive CodeQL query—now what? Since CodeQL results only give us a bit of information to go on (namely, line numbers and symbol names from source code), we can use Tree-sitter to fetch the rest of the code context that we’d want to send to an LLM for deeper analysis. Parsing the codebase with Tree-Sitter also allows us to control the call depth that we want to limit results to.

$ slice query -h

Run CodeQL queries against a database and enrich the vulnerability findings

with full source code context using Tree-Sitter parsing.

Flags:

-c, --call-depth int Maximum call chain depth (-1 = no limit)

-b, --codeql-bin string Path to CodeQL CLI binary

-j, --concurrency int Number of concurrent workers for result processing

-d, --database string Path to CodeQL database (required)

-q, --query string Path to CodeQL query file (.ql) (required)

-s, --source string Path to source code directory (required)

The following slice query subcommand runs a CodeQL query on an already-built CodeQL database, enriches the results with Tree-Sitter, and returns only those results with the specified maximum call depth:

$ slice query \

--call-depth 3 \

--database smb-server \

--query uaf.ql \

--source fs/smb/server/ \

>query.json

$ jq '.results | map(select(.query |

.free_func == "krb5_authenticate"

and

.use_func == "smb2_sess_setup"

))[0]' query.json |

cut -c -80

{

"query": {

"object": "user",

"free_func": "krb5_authenticate",

"free_file": "smb2pdu.c",

"free_func_def_ln": 1580,

"free_ln": 1606,

"use_func": "smb2_sess_setup",

"use_file": "smb2pdu.c",

"use_func_def_ln": 1668,

"use_ln": 1910

},

"source": {

"free_func": {

"def": " 1580 static int krb5_authenticate(struct ksmbd_work *work,\n 158

"snippet": "ksmbd_free_user(sess->user);"

},

"use_func": {

"def": " 1668 int smb2_sess_setup(struct ksmbd_work *work)\n 1669 {\n 16

"snippet": "if (sess->user && sess->user->flags & KSMBD_USER_FLAG_DELAY_SE

},

"inter_funcs": []

},

"calls": {

"valid": true,

"reason": "Target function can reach source function",

"chains": [

[

"smb2_sess_setup",

"krb5_authenticate"

]

],

"details": "Reverse call: smb2_sess_setup calls krb5_authenticate",

"min_depth": 1,

"max_depth": 1

}

}

$ jq '.results | length' query.json

217

217 results sounds more reasonable than 1722, but still quite high. We’ve now got a strong method of finding UAF candidates and enriching those results with extra context for an LLM. Let’s keep moving.

How to raise the signal-to-noise ratio?

Rather than trying to manually triage 217 potential use-after-free vulnerabilities, we’ll instead make two passes with an LLM:

- Triage with a relatively small model. Without yet worrying about any security implications—is the “use” even reachable downstream from the “free”? Do they actually appear to be operating on the same object? This is a quick check meant to sanity-check CodeQL results2 and avoid wasting tokens in the next step.

- Analyze the syntactically valid UAF more deeply with a large model. What conditions might actually lead to accessing freed memory? Is this actually exploitable? How might it be fixed?

We can use the slice filter subcommand for both steps:

$ slice filter -h

Filter CodeQL vulnerability detection results using an LLM.

This command processes vulnerability findings using a template-driven approach.

The template determines the behavior, output structure, and processing params.

Flags:

-a, --all Output all results regardless of validity

-b, --base-url string Base URL for OpenAI-compatible API

-j, --concurrency int Number of concurrent LLM API calls

-t, --max-tokens int Maximum tokens in response

-m, --model string Model to use

-p, --prompt-template string Path to custom prompt template file

-r, --reasoning-effort string Reasoning effort for GPT-5 models

--temperature float32 Temperature for response generation

--timeout int Timeout in seconds

The following pipeline passes the CodeQL query output forward into two filter commands, adding .triage and .analyze keys to each element in the .results array. We’ll use

the faster, cost-efficient GPT-5 mini for triage, and

the flagship GPT-5 for analysis (high reasoning effort for both).

$ {

slice query \

--call-depth 3 \

--database smb-server \

--query uaf.ql \

--source fs/smb/server/ |

slice filter \

--prompt-template triage.tmpl \

--model gpt-5-mini \

--reasoning-effort high \

--concurrency 100 |

tee triage.json |

slice filter \

--prompt-template analyze.tmpl \

--model gpt-5 \

--reasoning-effort high \

--concurrency 20 \

>analyze.json

} 2>&1 | grep 'token usage statistics' | grep -oE 'call.*'

calls=217 prompt_tok=394621 comp_tok=425909 reason_tok=398464 cost_usd=1.75

calls=9 prompt_tok=31425 comp_tok=90766 reason_tok=69184 cost_usd=1.64

$ for step in triage analyze; do

echo -n "$step results: "

jq '.results | length' "$step.json"

done

triage results: 9

analyze results: 1

Took about 7 minutes to run and cost $1.75 (triage) + $1.64 (analysis) = $3.39 total. Result counts look promising. Let’s take a look:

$ jq '.results | map(.triage.reasoning)' triage.json | cut -c -80

[

"Yes. ksmbd_free_user(user) is called earlier in ntlm_authenticate() (e.g. lin

"Yes — on the path where sess->state == SMB2_SESSION_VALID the function call

"Yes. ntlm_authenticate calls ksmbd_free_user(user) inside the sess->state ==

"ntlm_authenticate() calls ksmbd_free_user(user). In some paths (e.g. sess->st

"Yes. smb2_sess_setup calls krb5_authenticate; inside krb5_authenticate it unc

"Yes — ntlm_authenticate() may call ksmbd_free_user(user) before returning t

"Yes. ntlm_authenticate (called from smb2_sess_setup) can call ksmbd_free_user

"Yes — based on the provided call chain there is a feasible path: smb_direct

"Yes — per the provided call chain, smb_direct_post_recv_credits frees the p

]

$ jq '.results | map(.analyze | {summary, vuln})' analyze.json | fold

[

{

"summary": "Valid use-after-free: On Kerberos re-authentication failure, smb

2_sess_setup() dereferences sess->user->flags after krb5_authenticate() has free

d the previous sess->user without nulling it, leading to a UAF read in the error

path.",

"vuln": {

"affected_object": "sess->user (struct ksmbd_user *)",

"free_loc": {

"file": "smb2pdu.c",

"func": "krb5_authenticate",

"line": 1606,

"snippet": "if (sess->state == SMB2_SESSION_VALID)\n\tksmbd_free_user(se

ss->user);"

},

"type": "use-after-free (read)",

"use_loc": {

"file": "smb2pdu.c",

"func": "smb2_sess_setup",

"line": 1910,

"snippet": "if (sess->user && sess->user->flags & KSMBD_USER_FLAG_DELAY_

SESSION)\n\ttry_delay = true;"

}

}

}

]

Hey, that’s the bug we wanted to see!

Sure, but is it consistent?

Yes. Across 10 consecutive runs3, it finds the correct bug every time.

| Stage | Model | API Calls | Prompt Tokens | Completion Tokens | Reasoning Tokens | Cost |

|---|---|---|---|---|---|---|

| Triage | GPT-5 mini | 217 | 394,621 | 417,573.8 | 390,067.2 | $1.71 |

| Analyze | GPT-5 | 8.6 | 29,072.7 | 90,052.8 | 69,708.8 | $1.63 |

| Total | – | 225.6 | 423,693.7 | 507,626.6 | 459,776 | $3.35 |

In 1 of those 10 runs, it also returned the following analyzed result. I haven’t reviewed this yet but documenting it here in the meantime.

$ jq '.results[1] | .analyze | {summary, vuln}' analyze.json | fold

{

"summary": "Likely use-after-free of struct smb_direct_transport (t) and/or it

s sendmsg mempool due to waking teardown on send_pending==0 before freeing send

messages, allowing teardown to free t->sendmsg_mempool while send_done still cal

ls smb_direct_free_sendmsg(), which dereferences t.",

"vuln": {

"affected_object": "struct smb_direct_transport *t (specifically t->sendmsg_

mempool and t->cm_id->device)",

"free_loc": {

"file": "transport_rdma.c",

"func": "smb_direct_destroy_transport (teardown path)",

"line": 0,

"snippet": "mempool_destroy(t->sendmsg_mempool);\nkfree(t);"

},

"type": "use-after-free",

"use_loc": {

"file": "transport_rdma.c",

"func": "smb_direct_free_sendmsg",

"line": 489,

"snippet": "mempool_free(msg, t->sendmsg_mempool);"

}

}

}

Future work

- Compare models. Measure GPT-5 family performance against Claude, Gemini, Grok, etc.—and also open-weight models like Qwen3, GLM-4.5, DeepSeek, gpt-oss, etc.

- Support other languages. CodeQL and Tree-Sitter have robust support for many compiled and interpreted languages like Go, Python, JavaScript, etc.

- Analyze large codebases. This experiment started with a predetermined target within the Linux kernel, but it’d be powerful to be able to automatically summarize independent components of a large codebase and identify high-value research targets.

- Rank analysis results. A concept I’ve explored extensively elsewhere that would have great application to this problem; the subcommand is already implemented but the final results have been too few to warrant ranking.

- Add more vuln classes. Only requires a new CodeQL query and prompt template.

- Expand eval dataset. Reproduce discovery of a few more high-profile vulnerabilities from the past few years.

- Output SARIF. The standard for static analysis tools.

- Experiment with decompiled code. Binary Ninja’s HIL is great for LLM analysis; interested to see if build-free CodeQL could be wrangled to analyze it. Thanks @SinSinology for some initial testing here.

- Dynamically generate queries. Use LLMs to generate just-in-time CodeQL queries on the fly. Thanks @evilsocket for the idea.